Opera unveils feature to access large language models locally

What's the story

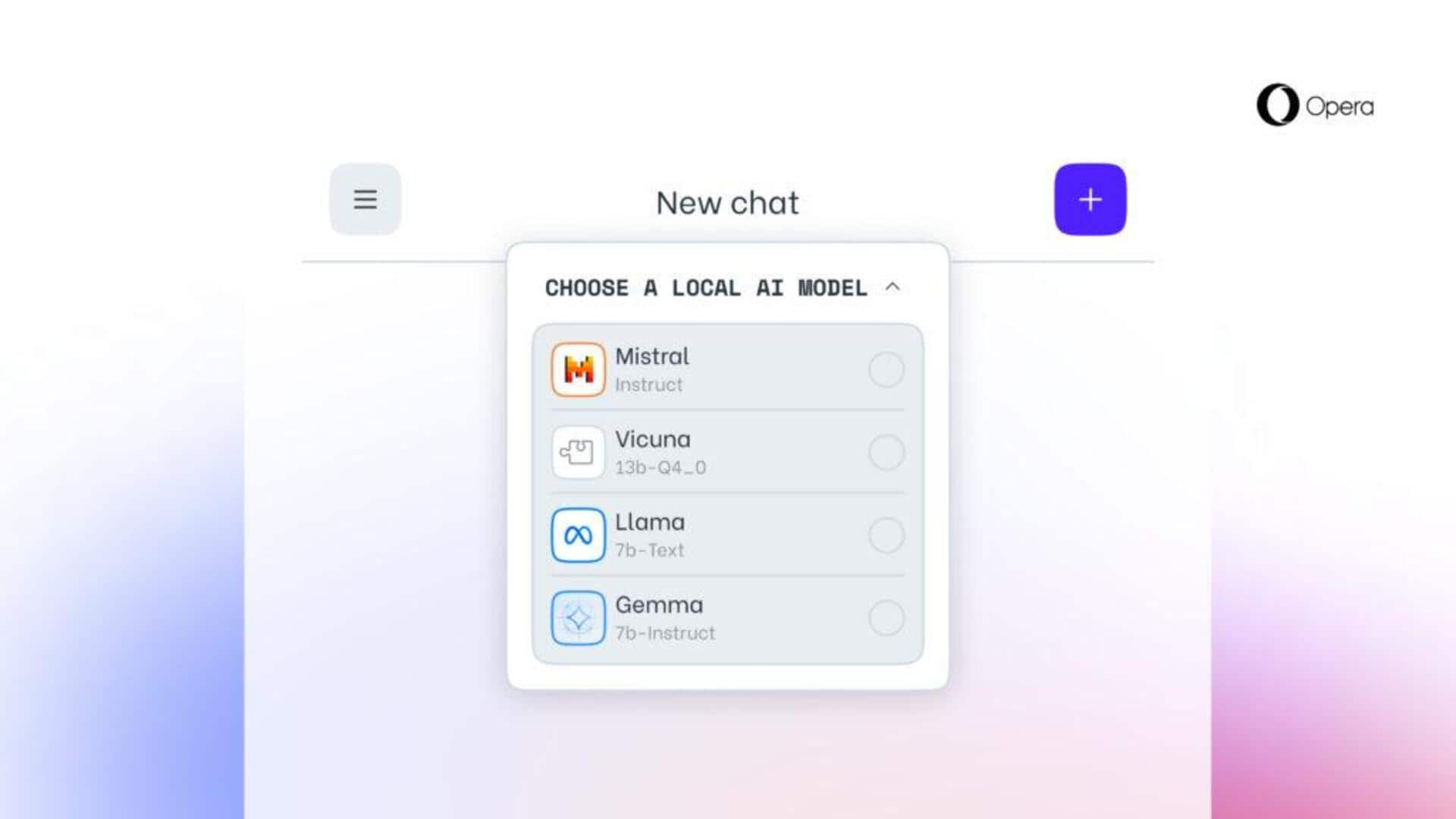

Opera, the web browser developer, has introduced a feature that lets users access and run large language models (LLMs) directly on their personal computers.

The feature is initially available to Opera One users through developer stream updates and provides access to more than 150 models from over 50 different families.

The selection includes well-known models such as Meta's LLaMA and Google's Gemma.

AI integration

Opera's AI Feature Drops Program facilitates LLM access

The feature is part of Opera's AI Feature Drops Program, which gives users early access to some of the company's artificial intelligence features.

To run these models on users' computers, Opera has built the open-source Ollama framework into its browser.

For now, all available models come from Ollama's library, but Opera plans to add models from other sources in the future.

Storage warning

Opera warns users about storage requirements for LLMs

Opera cautions that each model variant requires more than 2GB of storage space on the user's machine.

Because the browser does nothing to conserve storage when downloading a model, the company advises users to keep an eye on their free disk space.

The feature may suit users who want to try out several models locally, while those who are short on disk space may prefer other options.

AI advancements

Opera's continued exploration of AI-powered features

Opera has been experimenting with AI-powered features since last year.

In May, it launched Aria, an assistant in the browser's sidebar, and in August it brought Aria to the iOS version of the browser.

After the EU's Digital Markets Act (DMA) required Apple to drop its rule that mobile browsers on iOS must use the WebKit engine, Opera announced plans to develop an AI-powered browser with its own engine for iOS.