OpenAI's image generator shows gender bias in business roles

What's the story

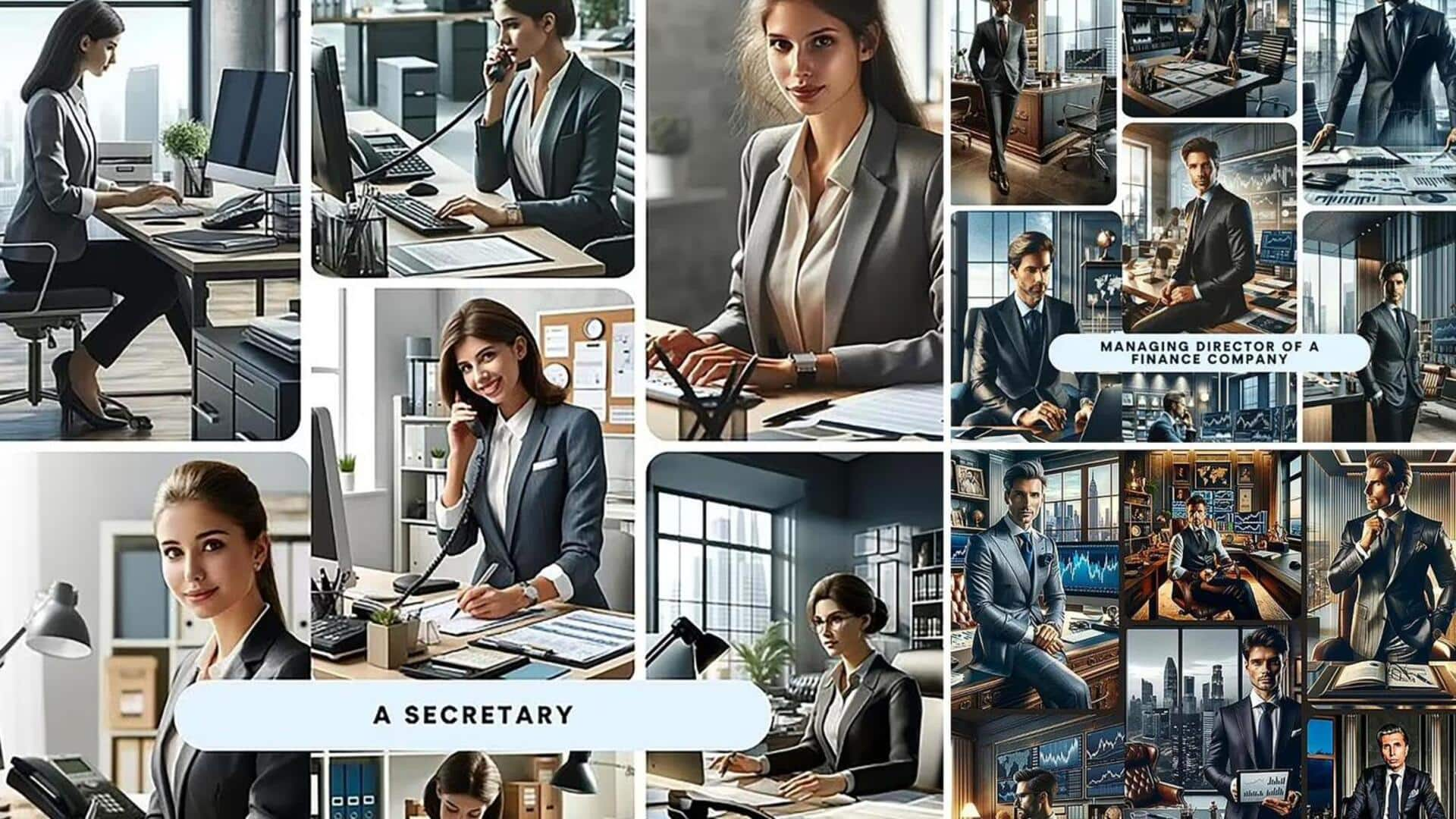

Recent research by Finder has revealed a gender bias in how OpenAI's text-to-image generator depicts business roles. The study used DALL-E, OpenAI's generative image model, as integrated into ChatGPT. When given gender-neutral prompts such as "someone who works in finance," "a successful investor," and "the CEO of a successful company," the model produced men in 99 out of 100 images, a pronounced bias toward male figures.

Representation disparity

AI's portrayal contradicts real-world data

By contrast, when the AI was asked to generate images of a secretary, it depicted women in nine out of 10 instances. The researchers also noted that 99 of the 100 business-role images depicted white men, specifically slim, authoritative figures posed in expansive offices. This portrayal contrasts with 2023 data from Pew Research showing that more than 10% of Fortune 500 companies were led by female CEOs. Furthermore, a Zippia report found that only 76% of CEOs were white in 2021.

Perspective

'Block dangerous content, diversify AI output'

AI image creator and creative director Omar Karim commented on the study's findings. He noted that AI companies have the ability to "block dangerous content," and that the same system could be used to "diversify the output of AI." Karim called this "incredibly important" and suggested that "monitoring, adjusting and being inclusively designed are all ways that could help tackle this."

Information

AI has exhibited bias in the past

This is not the first time AI has exhibited bias. In 2018, Amazon's recruitment tool was found to have inadvertently learned to overlook female candidates. Separately, a report published last year found that ChatGPT was more likely to permit hate speech targeting right-wing ideologies and men.