NVIDIA unveils Blackwell B200, the world's most powerful AI chip

What's the story

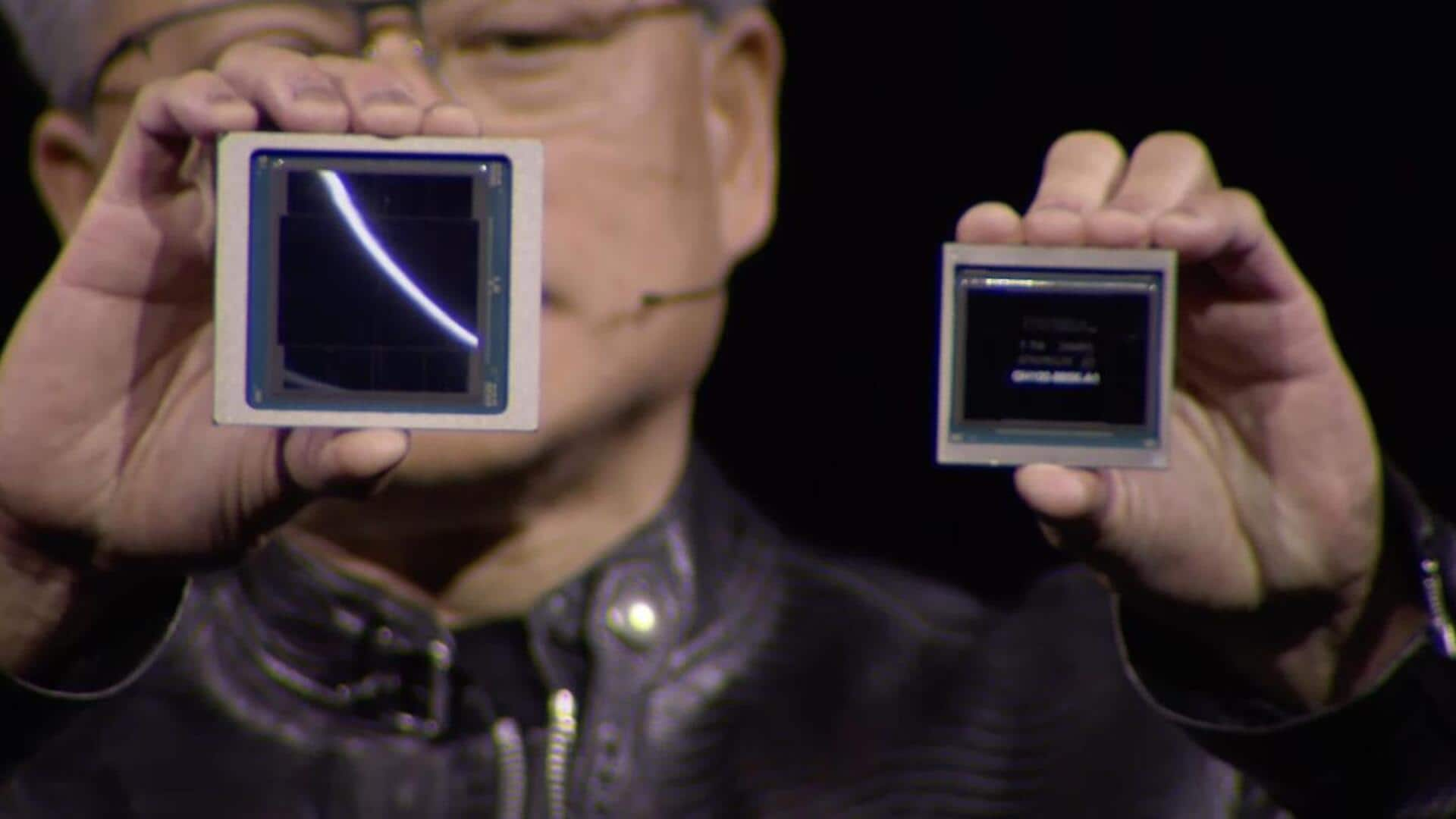

NVIDIA has pulled back the curtain on its latest creation, the Blackwell B200 GPU. Billed as the "world's most powerful chip" for artificial intelligence (AI), the new GPU is poised to bolster NVIDIA's lead in the AI chip market. The company says the Blackwell B200 can deliver up to 20 petaflops of FP4 compute from its 208 billion transistors and is designed to make trillion-parameter AI models more widely accessible.

Performance leap

AI efficiency and performance

According to NVIDIA, the Blackwell B200 GPU can reduce cost and energy consumption by a factor of up to 25 compared with its predecessor, the H100. Training a 1.8 trillion parameter model, which previously required 8,000 Hopper GPUs and 15 megawatts of power, can now be done with 2,000 Blackwell GPUs consuming four megawatts. This leap in efficiency is expected to further cement NVIDIA's dominance in the AI chip market.
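As a quick sanity check on the training example, the ratios can be worked out directly from the quoted figures. The sketch below uses only the numbers above; the variable names are illustrative, and the per-GPU value is simply total power divided by GPU count, so it includes whatever else NVIDIA counted in those megawatt totals.

```python
# Figures quoted by NVIDIA for training a 1.8 trillion parameter model.
hopper_gpus, hopper_megawatts = 8_000, 15
blackwell_gpus, blackwell_megawatts = 2_000, 4

# How many times fewer GPUs and how much less total power Blackwell needs.
gpu_ratio = hopper_gpus / blackwell_gpus
power_ratio = hopper_megawatts / blackwell_megawatts

# Average power per GPU in kilowatts (1 MW = 1,000 kW).
hopper_kw_per_gpu = hopper_megawatts * 1_000 / hopper_gpus
blackwell_kw_per_gpu = blackwell_megawatts * 1_000 / blackwell_gpus

print(f"GPU count reduction:   {gpu_ratio:.2f}x")
print(f"Total power reduction: {power_ratio:.2f}x")
print(f"Hopper:    {hopper_kw_per_gpu:.3f} kW per GPU")
print(f"Blackwell: {blackwell_kw_per_gpu:.3f} kW per GPU")
```

On these numbers the training example works out to about 4 times fewer GPUs and 3.75 times less power, so it does not by itself account for the "up to 25 times" figure, which appears to describe a different comparison.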

Upgrades

Key improvements in Blackwell B200 GPU

NVIDIA has highlighted two key enhancements in the Blackwell B200 GPU. The first is a second-generation transformer engine that doubles compute power, bandwidth, and model size by using four bits for each neuron instead of eight. The second comes into play when large numbers of these GPUs are networked together: a new NVLink switch allows 576 GPUs to communicate with each other, with 1.8 terabytes per second of bidirectional bandwidth per GPU.
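The doubling from four-bit precision follows from simple storage arithmetic: halving the bits per value halves the memory a model's weights occupy, so the same memory holds a model twice as large. The sketch below illustrates this for the 1.8 trillion parameter model mentioned earlier. It counts raw weight storage only, and the function name is hypothetical, not part of any NVIDIA tool.

```python
def weight_storage_terabytes(num_parameters: int, bits_per_parameter: int) -> float:
    """Raw storage for model weights only, in terabytes (1 TB = 1e12 bytes)."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e12


PARAMS = 1_800_000_000_000  # 1.8 trillion parameters

fp8_tb = weight_storage_terabytes(PARAMS, 8)  # eight bits per value
fp4_tb = weight_storage_terabytes(PARAMS, 4)  # four bits per value

print(f"8-bit weights: {fp8_tb:.1f} TB")
print(f"4-bit weights: {fp4_tb:.1f} TB")
print(f"Reduction:     {fp8_tb / fp4_tb:.1f}x")
```

Real deployments also need memory for activations, caches, and any scaling metadata the number format carries, so actual savings may be smaller than this idealized 2x.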

Insights

NVIDIA's new network switch chip and GB200 NVL72

To enable this communication between GPUs, NVIDIA had to build a new network switch chip with 50 billion transistors and its own onboard compute of 3.6 teraflops of FP8. The company is also introducing larger designs such as the GB200 NVL72, which packs 36 CPUs and 72 GPUs into a single liquid-cooled rack for a total of 720 petaflops of AI training performance or 1.4 exaflops of inference. The development underscores NVIDIA's push to scale AI hardware beyond the single chip.
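The rack-level totals line up with the per-GPU figure quoted at the top of the article. As a rough check, assuming the inference number is measured at FP4 and the rack total is a plain sum over its GPUs:

```python
GPUS_PER_RACK = 72
FP4_PETAFLOPS_PER_GPU = 20        # per-GPU FP4 figure quoted for the B200
RACK_TRAINING_PETAFLOPS = 720     # quoted rack-level training figure

# Inference: 72 GPUs at 20 petaflops each (1 exaflop = 1,000 petaflops).
rack_inference_petaflops = GPUS_PER_RACK * FP4_PETAFLOPS_PER_GPU
rack_inference_exaflops = rack_inference_petaflops / 1_000

# Training: divide the quoted rack total back down to a per-GPU figure.
training_petaflops_per_gpu = RACK_TRAINING_PETAFLOPS / GPUS_PER_RACK

print(f"Rack inference: {rack_inference_petaflops} petaflops "
      f"({rack_inference_exaflops:.2f} exaflops)")
print(f"Training per GPU: {training_petaflops_per_gpu:.0f} petaflops")
```

The product comes to 1,440 petaflops, which rounds to the 1.4 exaflops quoted, and the training figure implies about 10 petaflops per GPU, half the FP4 rate.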

Facts

Plans to offer NVL72 racks in cloud services

Major tech players including Amazon, Google, Microsoft, and Oracle are reportedly gearing up to incorporate the NVL72 racks into their cloud service offerings. Each rack can support a massive 27 trillion parameter model, highlighting its significant capacity for AI processing. However, the exact number of racks these companies plan to procure from NVIDIA remains undisclosed at this point.

Findings

NVIDIA's comprehensive solution: the DGX SuperPOD

Beyond the NVL72 racks, NVIDIA is offering a complete system in the DGX SuperPOD for DGX GB200. It combines eight units into one, for a total of 288 CPUs, 576 GPUs, 240TB of memory, and 11.5 exaflops of FP4 computing power. According to NVIDIA, its systems can scale to tens of thousands of GB200 superchips connected with its new Quantum-X800 InfiniBand or Spectrum-X800 Ethernet networking.
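The SuperPOD totals are consistent with eight NVL72 racks added together. The sketch below assumes that each of the eight units is one such rack (36 CPUs, 72 GPUs) and that each GPU contributes the 20 petaflops of FP4 quoted for the B200; both are readings of the figures in this article rather than a published breakdown.

```python
UNITS = 8                     # units combined in one SuperPOD
CPUS_PER_UNIT = 36            # assumed: one GB200 NVL72 rack per unit
GPUS_PER_UNIT = 72
FP4_PETAFLOPS_PER_GPU = 20
TOTAL_MEMORY_TB = 240         # quoted SuperPOD memory

total_cpus = UNITS * CPUS_PER_UNIT
total_gpus = UNITS * GPUS_PER_UNIT

# 1 exaflop = 1,000 petaflops.
total_fp4_exaflops = total_gpus * FP4_PETAFLOPS_PER_GPU / 1_000

# Memory share per unit, derived from the quoted total.
memory_tb_per_unit = TOTAL_MEMORY_TB / UNITS

print(f"CPUs: {total_cpus}, GPUs: {total_gpus}")
print(f"FP4 compute: {total_fp4_exaflops:.2f} exaflops")
print(f"Memory per unit: {memory_tb_per_unit:.0f} TB")
```

Under those assumptions the CPU and GPU counts match exactly, the compute total comes to 11.52 exaflops (quoted as 11.5), and each unit accounts for 30TB of the memory.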

Use cases

Blackwell GPU architecture to power future RTX 50-series

While the spotlight was primarily on AI and GPU computing during the announcement, NVIDIA's new Blackwell GPU architecture is also projected to fuel a future lineup of desktop graphics cards. The RTX 50-series could potentially reap the benefits of this new technology, although specific details about these gaming GPUs were not divulged during the announcement. This indicates that NVIDIA's strides in AI technology will continue to shape its broader product range.