MIT's AI-inspired approach lets robots learn faster

What's the story

Researchers at MIT have unveiled a novel approach to training robots, inspired by the success of large language models (LLMs). Conventional methods teach a robot a task using a dataset collected specifically for that task. The new method instead draws on vast amounts of diverse data, much as LLMs do, to speed up the process of teaching robots new skills. The research team found that imitation learning, in which a robot learns by copying demonstrations, can fail when even small changes are introduced, because there is too little data for the robot to adapt.

Innovative approach

New architecture for robot training: Heterogeneous pretrained transformers

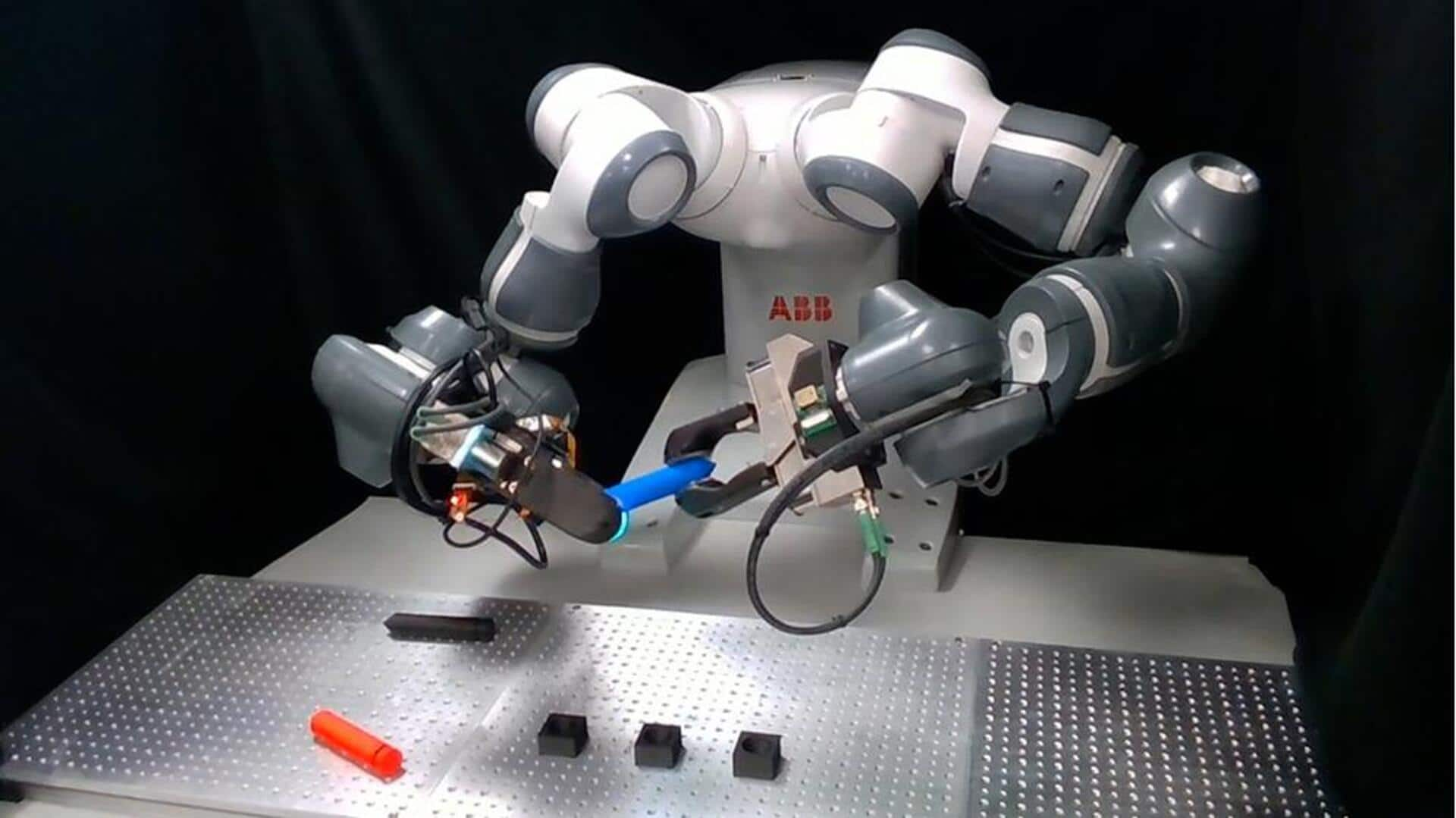

To overcome the shortcomings of traditional methods, the research team created a new architecture called Heterogeneous Pretrained Transformers (HPT). The system combines data from different sensors and environments and uses a transformer to unify this information so it can be used to train models. The larger the transformer, the better the resulting models perform. "In robotics, given all the heterogeneity in the data, if you want to pretrain in a similar manner, we need a different architecture," said Lirui Wang, the study's lead author.
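The article does not describe HPT's internals beyond its shared transformer. As a rough illustration of how data from very different robots could pass through one shared model, here is a minimal sketch under assumed design choices: each robot gets its own small input "stem" that turns its sensor readings into a fixed number of tokens of a common width, one shared transformer "trunk" (here a single self-attention layer) processes those tokens, and a per-robot output "head" turns the result into actions. The names `Stem`, `SharedTrunk`, `Head`, the token width `D`, and the two example robots are hypothetical. The weights are random and untrained, and a real system would use a deep learning framework with many stacked layers.

```python
import math
import random

random.seed(0)
D = 8  # hypothetical shared token width used by every robot


def linear(in_dim, out_dim):
    """Random (untrained) weight matrix with out_dim rows of in_dim values."""
    return [[random.gauss(0, 1 / math.sqrt(in_dim)) for _ in range(in_dim)]
            for _ in range(out_dim)]


def matvec(w, x):
    """Multiply matrix w by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


class Stem:
    """Robot-specific input: maps a sensor vector of any size to n_tokens D-dim tokens."""
    def __init__(self, obs_dim, n_tokens):
        self.projs = [linear(obs_dim, D) for _ in range(n_tokens)]

    def __call__(self, obs):
        return [matvec(p, obs) for p in self.projs]


class SharedTrunk:
    """Single-head self-attention layer with a residual connection, shared by all robots."""
    def __init__(self):
        self.wq, self.wk, self.wv = linear(D, D), linear(D, D), linear(D, D)

    def __call__(self, tokens):
        q = [matvec(self.wq, t) for t in tokens]
        k = [matvec(self.wk, t) for t in tokens]
        v = [matvec(self.wv, t) for t in tokens]
        out = []
        for qi, ti in zip(q, tokens):
            scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(D) for kj in k]
            weights = softmax(scores)
            attended = [sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(D)]
            out.append([x + y for x, y in zip(ti, attended)])  # residual connection
        return out


class Head:
    """Robot-specific output: averages the trunk's tokens and maps them to an action vector."""
    def __init__(self, action_dim):
        self.w = linear(D, action_dim)

    def __call__(self, tokens):
        pooled = [sum(t[d] for t in tokens) / len(tokens) for d in range(D)]
        return matvec(self.w, pooled)


trunk = SharedTrunk()  # one trunk reused by every robot below
robots = {
    # hypothetical robots with different sensor sizes and action spaces
    "arm_with_camera": (Stem(obs_dim=12, n_tokens=4), Head(action_dim=7)),
    "mobile_base_lidar": (Stem(obs_dim=30, n_tokens=6), Head(action_dim=2)),
}


def act(robot, obs):
    stem, head = robots[robot]
    return head(trunk(stem(obs)))


print(len(act("arm_with_camera", [0.1] * 12)))    # 7
print(len(act("mobile_base_lidar", [0.5] * 30)))  # 2
```

In this toy setup, only the stems and heads depend on a particular robot's sensors and action space, so supporting a new robot means adding one stem/head pair while reusing the same trunk. The shared trunk is the part that, in a pretraining setting, would learn from every robot's data at once.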

Aspiration

The ultimate goal: A universal robot brain

With HPT, users enter their robot's design and configuration along with the task they want it to perform. The end goal of the research is a ready-to-use "universal robot brain." "Our dream is to have a universal robot brain that you could download and use for your robot without any training at all," said David Held, an associate professor at Carnegie Mellon University (CMU).

Collaboration

Toyota Research Institute's involvement and future plans

The research was partially funded by the Toyota Research Institute (TRI), which previously demonstrated a method for training robots overnight at TechCrunch Disrupt. TRI also recently signed a major partnership to combine its robot learning research with Boston Dynamics' hardware. These developments show how far robot training methods could go.