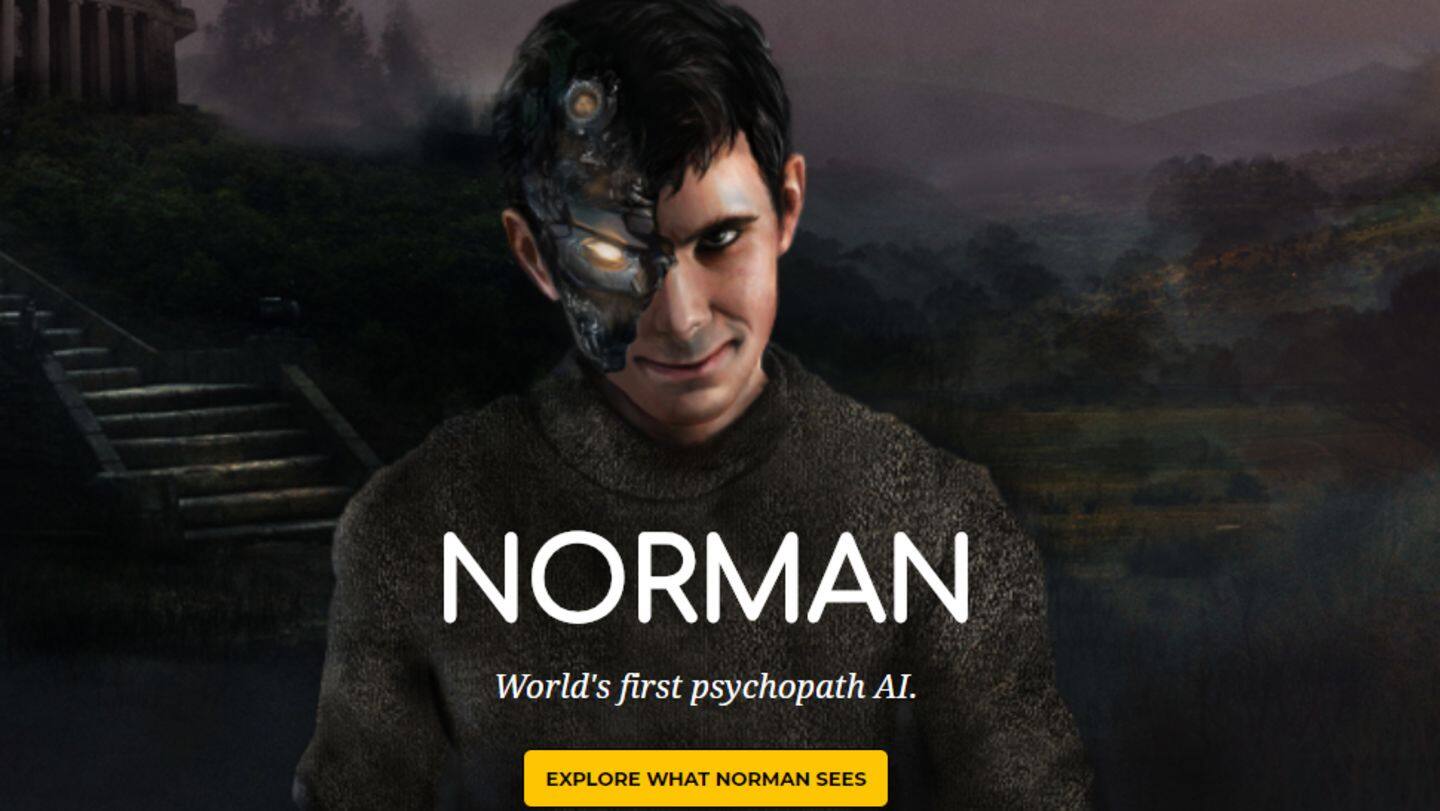

Meet Norman: MIT's "psychopath" AI engine that thinks only of murder

What's the story

The age of artificial intelligence (AI) is upon us, and there's no reversing the tide. For some, AI symbolizes utopian hopes of an automated future, but many others view the technology with apprehension, fearing that AI will eventually overthrow humanity. If you fall into the second group, here's some disturbing news: MIT scientists have created an AI engine, Norman, that sees only murder.

Norman

The Norman AI gets its name from Hitchcock's "Psycho"

Norman, an AI trained to perform image captioning, is named after the murderous Norman Bates of Alfred Hitchcock's "Psycho", and it certainly lives up to the name. Because it was trained on written captions describing graphic images and videos of death and murder, drawn from the "darkest corners" of Reddit, Norman sees slaughter where other AI systems see birds or umbrellas.

Rorschach test

Norman only saw murder when subjected to the Rorschach test

The MIT team showed Norman a series of Rorschach inkblots and compared its captions with those of a standard image-captioning neural network. Where the standard AI saw a bird, a baseball glove, and a person holding an umbrella, Norman saw, respectively, a man being pulled into a dough machine, a man being murdered with a machine gun, and a man being shot dead.

Implications

The project demonstrates the consequences of algorithmic bias

Yet it isn't Norman's reading of the inkblots that's disturbing; it's what the research project implies. Norman effectively demonstrates the consequences of algorithmic bias. Simply put, the data used to train an AI system essentially determines how that system behaves: if an AI is trained on biased data, it will behave in a biased manner.
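To make the training-data point concrete, here is a toy sketch in Python. It is not MIT's Norman model or a real image captioner; it assumes a hypothetical 1-nearest-neighbour "captioner" (the `train` and `caption` functions are made up for illustration) that returns the caption attached to the most similar training example, with invented two-number feature vectors standing in for images. Both models run identical code on identical inputs; only the captions in their training data differ.

```python
from math import dist  # Euclidean distance, Python 3.8+

# Hypothetical toy "captioner" (not MIT's Norman): 1-nearest-neighbour lookup.
def train(examples):
    """Store (feature_vector, caption) pairs; 1-NN has no other training step."""
    return list(examples)

def caption(model, features):
    """Return the caption of the stored example closest to `features`."""
    _, best_caption = min(model, key=lambda ex: dist(ex[0], features))
    return best_caption

# Invented feature vectors standing in for two training images.
images = [(0.9, 0.1), (0.1, 0.9)]

# Same images, different captions: the only difference between the two models.
standard_model = train(zip(images, ["a bird", "a person holding an umbrella"]))
norman_like_model = train(zip(images, ["a man being shot dead",
                                       "a man being pulled into a dough machine"]))

inkblot = (0.8, 0.2)  # the same "inkblot" is shown to both models
print(caption(standard_model, inkblot))     # a bird
print(caption(norman_like_model, inkblot))  # a man being shot dead
```

Since nothing but the training labels changed, the difference in output comes entirely from the data, which is the effect the Norman project illustrates with a real neural network.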

Quote

Norman: A case study on the dangers of biased AI

"Norman suffered from extended exposure to the darkest corners of Reddit, and represents a case study on the dangers of artificial intelligence gone wrong when biased data is used in machine learning algorithms," wrote the MIT researchers.

AI research

It's important to keep asking questions in AI research

Although we're still far from creating the kind of artificial general intelligence (AGI) seen in sci-fi movies, the widespread use of AI algorithms today makes their potential misuse a grave threat. So while Norman is only a thought experiment and won't be killing anyone any time soon, it's important for AI researchers to keep asking such questions and to try to understand AI thought patterns.