Ban killer robots: "We do not have long to act"

What's the story?

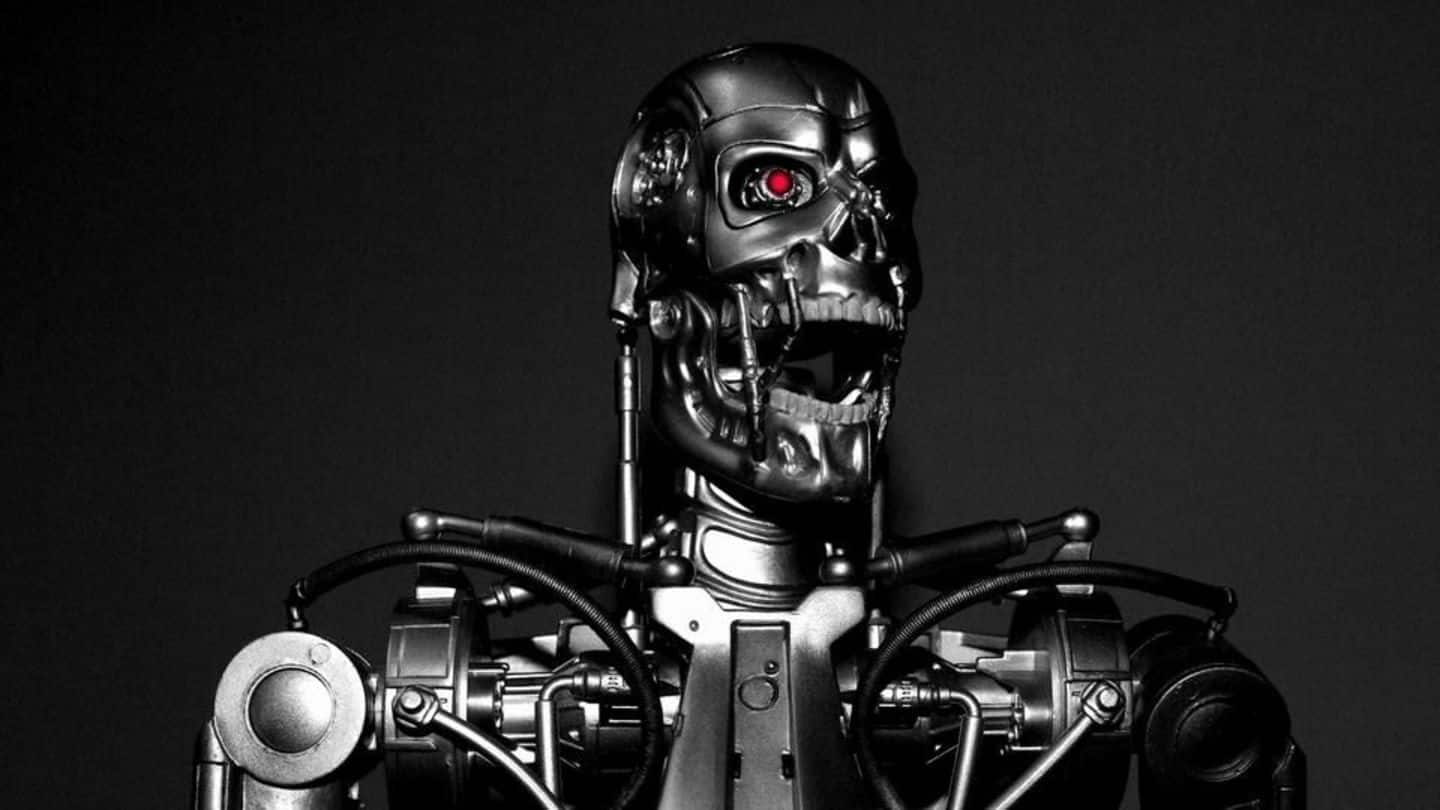

The Terminator fear, of robots wiping out humanity without blinking an eye, is very real, and the UN should act on it.

It's not just me saying it. More than a hundred leading robotics experts are saying it, and they have penned a letter warning of "a third revolution in warfare".

And yes, our favorite doomsayer, Elon Musk, is on the list.

Here's more about it.

What's it all about?

In their letter to the United Nations, the artificial intelligence (AI) leaders warn that "lethal autonomous" technology is a "Pandora's box", and that if humanity keeps prodding it, chaos will ensue.

The experts want an immediate ban on research into AI that manages weaponry without human guidance. The clock is ticking, and action should be taken without any dillydallying.

How bad is it?

The prophetic letter warns of a threat looming in the near future, saying, "These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways."

The experts therefore want the authorities to add this "morally wrong" technology to the list of weapons banned under the UN Convention on Certain Conventional Weapons (CCW).

Does it carry any weight?

The letter bears strong signatures.

The signatories include Mustafa Suleyman, co-founder of Google's DeepMind; Elon Musk, co-founder and CEO of Tesla; and several Indian experts, such as Pulkit Gaur, founder and CTO of Gridbots Technologies, and Shahid Memon, founder and CTO of Vanora Robots. All of them are demanding immediate action against these killer robots.

Their reasoning: "Once this Pandora's box is opened, it will be hard to close."

What is the likely outcome?

In 2015, more than 1,000 tech experts signed a similar letter warning the world about autonomous weaponry. Among the signatories were Stephen Hawking, Apple co-founder Steve Wozniak, and Elon Musk.

UN committees have discussed a ban on "killer robot" technology before. Chances are the issue will be raised again after this letter.