Google open-sources SynthID, its watermarking tool for AI-generated text

What's the story

Google has made its SynthID text watermarking technology available as open source. The tool, designed to identify AI-generated text, is now accessible via Google's Responsible Generative AI Toolkit. The move aims to help developers determine whether a given piece of text was produced by their own large language models (LLMs). Pushmeet Kohli, Vice President of Research at Google DeepMind, highlighted the significance of SynthID in an interview with MIT Technology Review, saying the tech would "make it easier for more developers to build AI responsibly."

Information

AI watermarking gains attention amid rising misuse

Watermarks are gaining importance as large language models are exploited to spread political misinformation, create nonconsensual sexual content, and serve other harmful purposes. California is considering mandatory AI watermarking, following the lead of China, which began enforcing it last year. However, these tools remain under development.

Working principle

SynthID's mechanism and its global implications

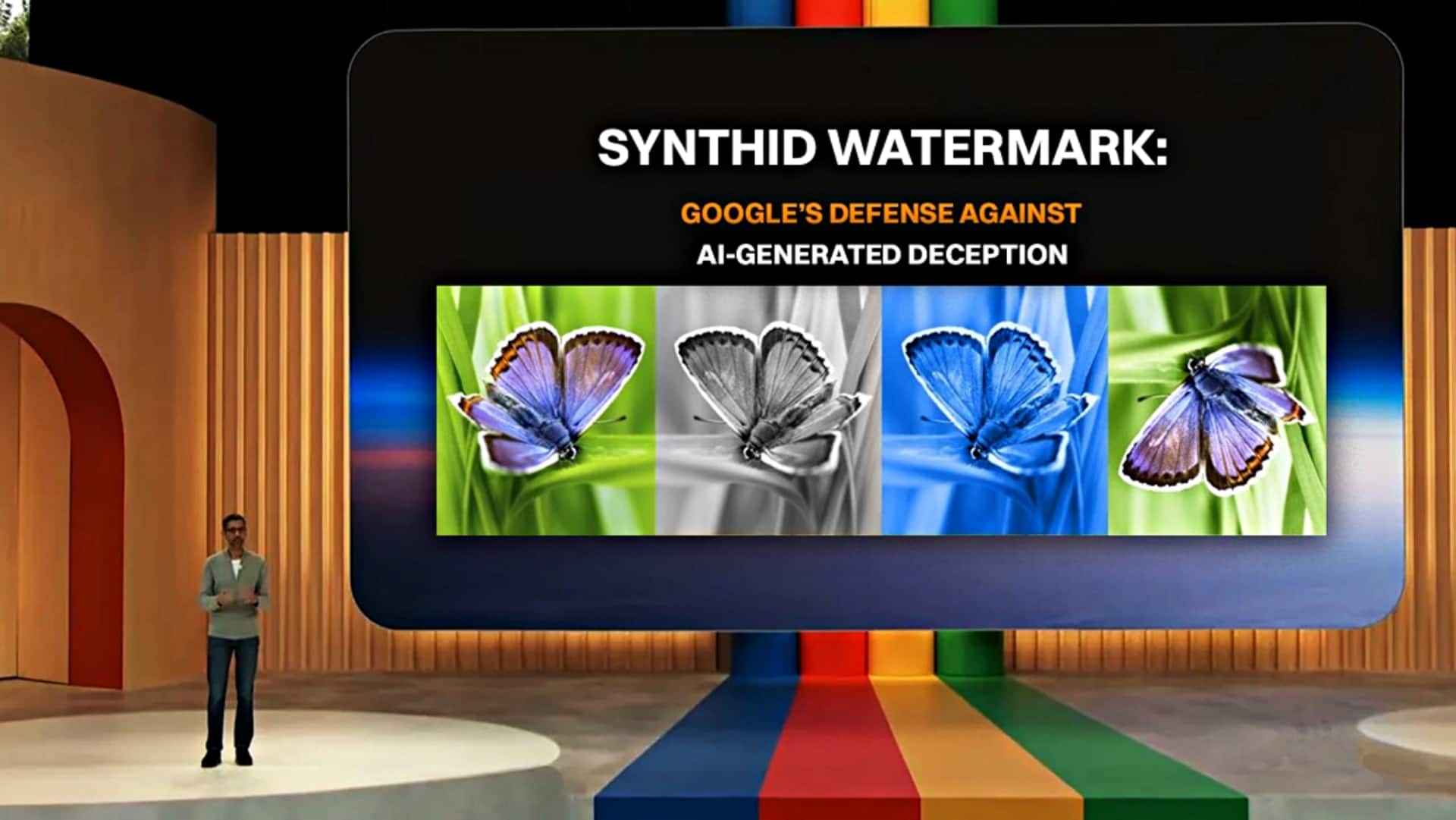

SynthID works by subtly modifying the probabilities of text outputs in a way that software can detect but humans cannot. As the model generates text, the tool adjusts the probability score of each predicted token, without compromising output quality, accuracy, or creativity. The pattern formed by these adjusted scores is the watermark.
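To make the idea of nudging token probabilities concrete, here is a minimal Python sketch. It is not SynthID's actual algorithm: it assumes a much simpler scheme in which a secret key and the previous token pseudorandomly mark about half the vocabulary as "favored," and the sampler adds a small boost to those tokens' scores. The vocabulary, key, bias value, and all function names are hypothetical, and random scores stand in for a real model.

```python
import hashlib
import math
import random
from typing import Dict, List

VOCAB = [f"tok{i}" for i in range(50)]  # hypothetical toy vocabulary
SECRET_KEY = "demo-key"                  # hypothetical watermark key
BIAS = 4.0                               # score boost given to favored tokens


def is_favored(prev_token: str, token: str) -> bool:
    """Keyed pseudorandom split of the vocabulary, seeded by the previous token."""
    data = f"{SECRET_KEY}|{prev_token}|{token}".encode()
    return hashlib.sha256(data).digest()[0] % 2 == 0  # about half are favored


def sample_next(prev_token: str, logits: Dict[str, float],
                rng: random.Random, watermark: bool) -> str:
    """Sample one token, optionally boosting the scores of favored tokens."""
    adjusted = {
        tok: score + (BIAS if watermark and is_favored(prev_token, tok) else 0.0)
        for tok, score in logits.items()
    }
    peak = max(adjusted.values())  # subtract the max for numerical stability
    weights = [math.exp(score - peak) for score in adjusted.values()]
    return rng.choices(list(adjusted), weights=weights, k=1)[0]


def generate(length: int, seed: int, watermark: bool) -> List[str]:
    """Generate tokens from random scores (a stand-in for a language model)."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(length):
        logits = {tok: rng.gauss(0.0, 1.0) for tok in VOCAB}
        tokens.append(sample_next(tokens[-1], logits, rng, watermark))
    return tokens[1:]


def favored_fraction(tokens: List[str]) -> float:
    """Detector side: the share of tokens that land in their favored set."""
    previous = ["<start>"] + tokens[:-1]
    hits = sum(is_favored(p, t) for p, t in zip(previous, tokens))
    return hits / len(tokens)


if __name__ == "__main__":
    print("watermarked:", favored_fraction(generate(300, 0, watermark=True)))
    print("plain:      ", favored_fraction(generate(300, 0, watermark=False)))
```

In this toy setup, watermarked output lands in the favored set far more often than the roughly 50% expected by chance, which is the statistical signal a detector holding the key can look for. A reader sees only ordinary-looking tokens.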

Tool application

Integration and limitations

Google has integrated SynthID into its Gemini chatbot without compromising the quality, accuracy, creativity, or speed of the generated text. The system can work with text as short as three sentences, and even with text that has been cropped, paraphrased, or modified. However, it struggles with short texts that have been rewritten or translated, and with answers to factual questions.
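One way to see why short passages are harder to flag is a back-of-the-envelope calculation. The sketch below assumes a simplified detector, not Google's published one, that counts how many tokens fall into a keyed "favored" set that unwatermarked text would hit about half the time. The function name and the 75% hit rate are illustrative assumptions.

```python
import math


def detection_z_score(favored_hits: int, total_tokens: int,
                      base_rate: float = 0.5) -> float:
    """One-proportion z-score: how far the hit count sits above chance."""
    expected = base_rate * total_tokens
    std_dev = math.sqrt(total_tokens * base_rate * (1.0 - base_rate))
    return (favored_hits - expected) / std_dev


if __name__ == "__main__":
    # Same 75% hit rate at three different text lengths.
    for n in (20, 80, 320):
        hits = round(0.75 * n)
        print(n, round(detection_z_score(hits, n), 2))
    # prints:
    # 20 2.24
    # 80 4.47
    # 320 8.94
```

With the hit rate held constant, the score grows with the square root of the token count, so a longer passage gives much stronger evidence. Editing or translating a passage replaces tokens and pulls the hit rate back toward chance, which weakens the signal further.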

Tool impact

Role in AI content identification

Despite these limitations, Google maintains that SynthID is key to developing trustworthy AI identification tools. The company said in a blog post, "SynthID isn't a silver bullet for identifying AI-generated content, [but it] is an important building block for developing more reliable AI identification tools and can help millions of people make informed decisions about how they interact with AI-generated content."