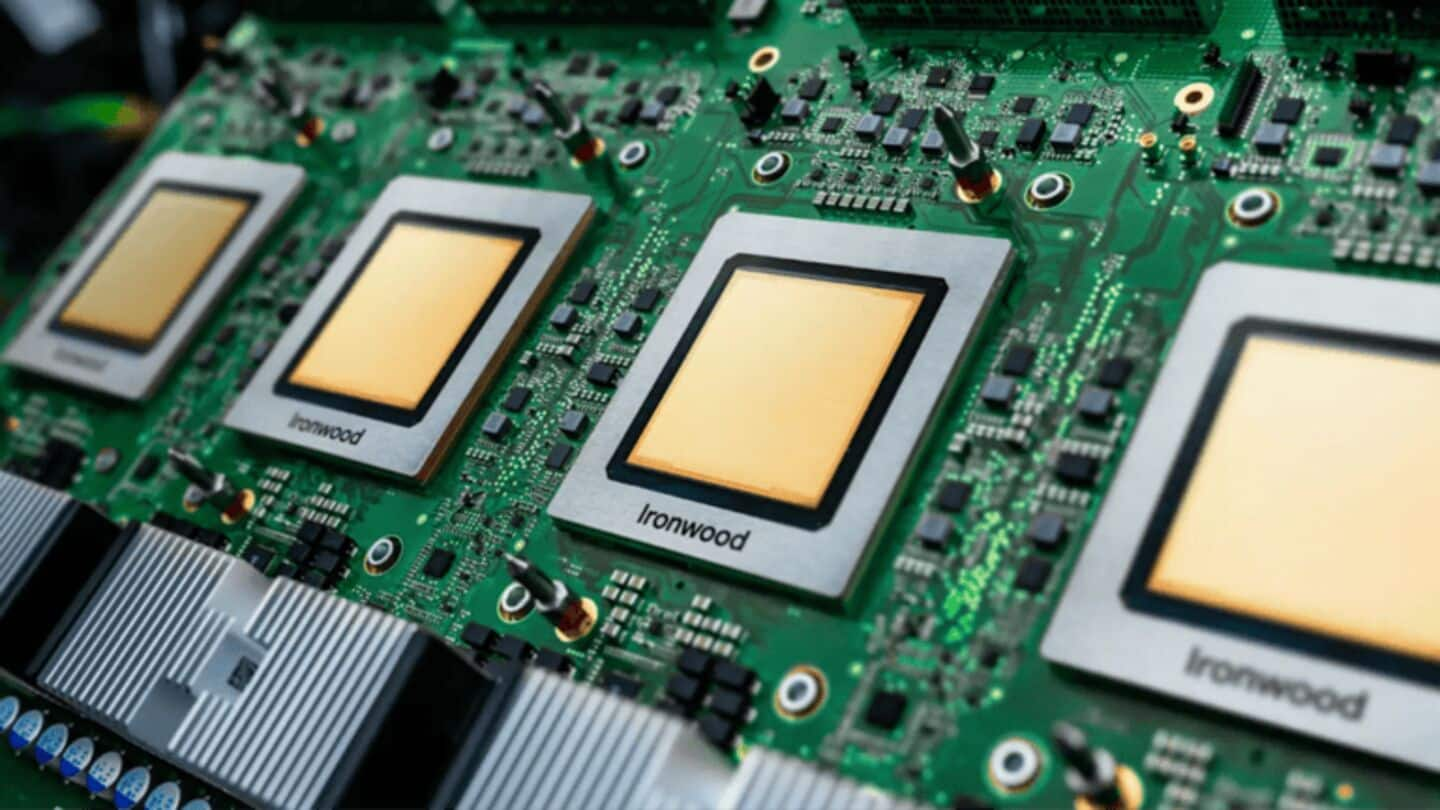

Google unveils Ironwood, its most advanced AI chip yet

What's the story

Google has unveiled Ironwood, the newest member of its Tensor Processing Unit (TPU) family of accelerator chips.

The seventh-generation TPU has been specifically optimized for inference, that is, running AI models.

It will be released later this year and will be available to Google Cloud customers in two configurations: a 256-chip cluster and a 9,216-chip cluster.

Google's Cloud VP Amin Vahdat described Ironwood as the company's "most powerful, capable, and energy-efficient TPU yet."

Use

Ironwood is 'purpose-built'

Vahdat emphasized that Ironwood is "purpose-built to power thinking, inferential AI models at scale." The launch comes as major tech companies like Amazon and Microsoft are also developing their own proprietary solutions for AI acceleration.

Metrics

A look at the performance

According to Google's internal benchmarks, each Ironwood chip can deliver peak compute of 4,614 TFLOPs.

Each chip comes with 192GB of dedicated RAM and memory bandwidth nearing 7.4TB/s.
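
To put the per-chip numbers in context, here is a rough back-of-envelope sketch of what the two cluster sizes would add up to. It assumes peak compute and memory scale linearly with chip count, which yields a theoretical ceiling rather than a measured result; the function and constant names are illustrative and do not come from Google.

```python
# Back-of-envelope aggregates from the per-chip figures quoted in the article.
# Assumption: peak numbers scale linearly with chip count, which gives a
# theoretical upper bound, not a benchmark result.

PEAK_TFLOPS_PER_CHIP = 4_614   # per-chip peak compute, from the article
MEMORY_GB_PER_CHIP = 192       # per-chip dedicated RAM, from the article
CLUSTER_SIZES = (256, 9_216)   # the two announced configurations


def cluster_totals(chips: int) -> dict:
    """Return aggregate peak compute and memory for a cluster of `chips`."""
    total_tflops = chips * PEAK_TFLOPS_PER_CHIP
    total_memory_gb = chips * MEMORY_GB_PER_CHIP
    return {
        "chips": chips,
        "peak_exaflops": total_tflops / 1_000_000,  # 1 EFLOP = 10^6 TFLOPs
        "memory_tb": total_memory_gb / 1_000,       # decimal terabytes
    }


for size in CLUSTER_SIZES:
    totals = cluster_totals(size)
    print(f"{totals['chips']:>5} chips: "
          f"{totals['peak_exaflops']:.2f} EFLOPs peak, "
          f"{totals['memory_tb']:.1f} TB RAM")
```

Under that linear-scaling assumption, the 256-chip cluster works out to roughly 1.18 exaFLOPs of peak compute and 49.2TB of RAM, while the 9,216-chip cluster comes to about 42.52 exaFLOPs and 1,769.5TB. Real workloads would land below these ceilings.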

The chip also comes with an enhanced specialized core, SparseCore, optimized for processing data types prevalent in advanced ranking and recommendation workloads, like personalized shopping suggestions.

Design

Ironwood's architecture reduces latency

Google has designed Ironwood's architecture to minimize on-chip data movement and latency, which in turn reduces power consumption.

Vahdat also revealed that the company plans to integrate Ironwood with its AI Hypercomputer, a modular computing cluster within Google Cloud, in the near future.