Facebook establishing task force to thwart election-related interference in India

What's the story

As India gears up for the 2019 Lok Sabha elections, social media giant Facebook has announced plans to deploy a dedicated task force of content specialists.

The team, the company said, will monitor news on the platform and prevent it from being abused for election-related interference.

Here are the details.

Task force details

Hundreds of Facebook specialists to monitor content

The plan for the new task force, which is still being formed and is said to include hundreds of specialists, was announced at a recent workshop on Facebook's community standards.

It will comprise content and security specialists who will work with political parties and look at different ways in which election-related abuse could happen in India.

The 'task'

Differentiating between real news and propaganda

Facebook already has a dedicated team to maintain community standards, but this group, formed from existing employees and new recruits, will moderate content from a political perspective.

As part of this, it will analyze political news and conduct fact-checks to determine whether it is genuine or political propaganda.

Facebook said the team will be based mainly in India.

Quote

Statement from Facebook's VP

Announcing the new task force, Richard Allan, Facebook's VP for public policy, said, "Facebook wants to help countries around the world, including India, to conduct free and fair elections."

Facebook's problems

However, these are tough times for Facebook

Facebook's move comes as it continues to deal with the aftermath of a major security breach.

The social network has been battling a series of privacy-related issues and has also been embroiled in a controversy over Russian interference in the 2016 US presidential election.

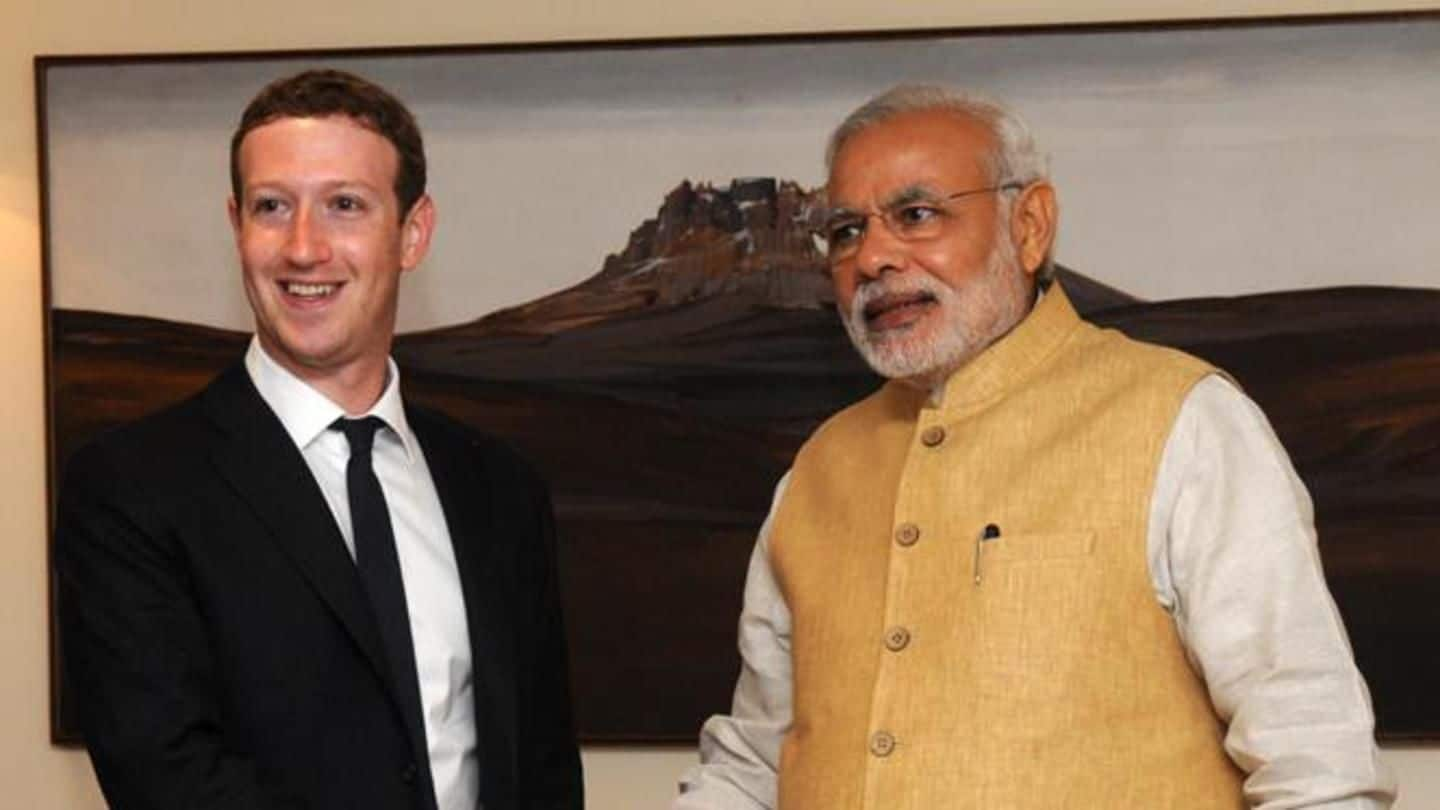

In April, Mark Zuckerberg told lawmakers that Facebook would ensure its platform is not misused to influence future elections.

Current techniques

Facebook's current moderation techniques

Currently, Facebook uses AI and machine learning-based tools, as well as community reports, to flag policy-violating content on its platform.

Each user report goes to Facebook's 'Community Operations' team, which reviews content in 12 Indian languages and determines if it's abusive.

Facebook plans to expand this team to 20,000 members by the end of 2018.