Google announces a 27-billion-parameter AI model named Gemma 2

What's the story

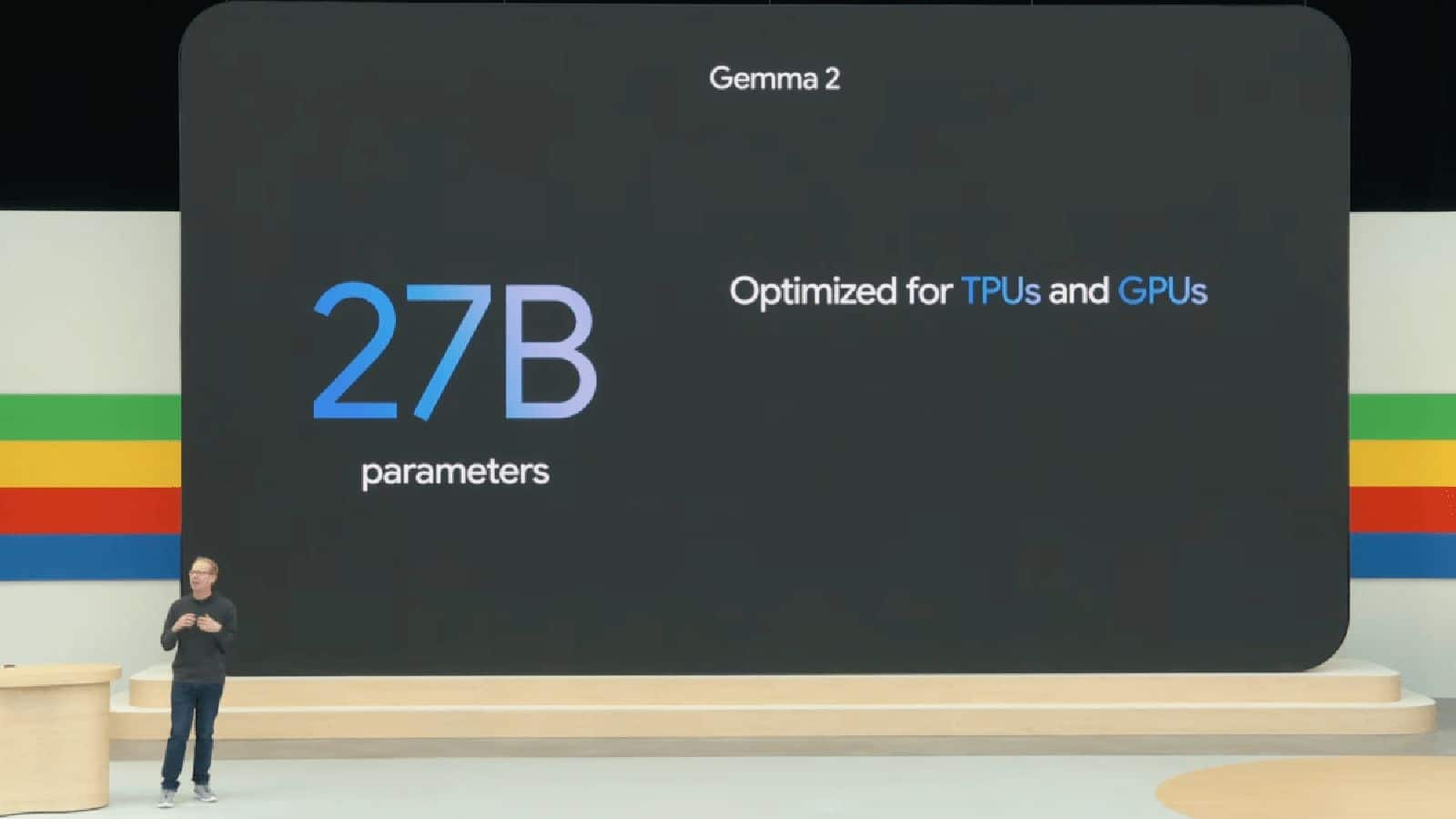

Google unveiled several additions to its Gemma family of AI models at the I/O 2024 developer conference. The most significant is Gemma 2, the next iteration of Google's open-weight Gemma models, set to launch in June. The model has 27 billion parameters, a substantial increase over the original Gemma models launched earlier this year, which came only in two-billion-parameter and seven-billion-parameter versions.

New version

Google introduces PaliGemma, a pre-trained variant

In addition to Gemma 2, Google has launched PaliGemma, a pre-trained variant of the Gemma model. Described by Google as "the first vision language model in the Gemma family," PaliGemma is designed for image captioning, image labeling, and visual Q&A use cases. The new variant extends the existing Gemma models to more specialized, image-based applications.

Tech optimization

Google optimizes Gemma models for next-gen GPUs

Josh Woodward, vice president of Google Labs, said the Gemma models have been downloaded millions of times across various services. He added that the new 27-billion-parameter version has been optimized to run on NVIDIA's next-generation GPUs, a single Google Cloud TPU host, and the managed Vertex AI service. The optimization is expected to improve the models' performance and efficiency on these hardware platforms.