Privacy vs. performance: Making AI 'forget' has a high cost

What's the story

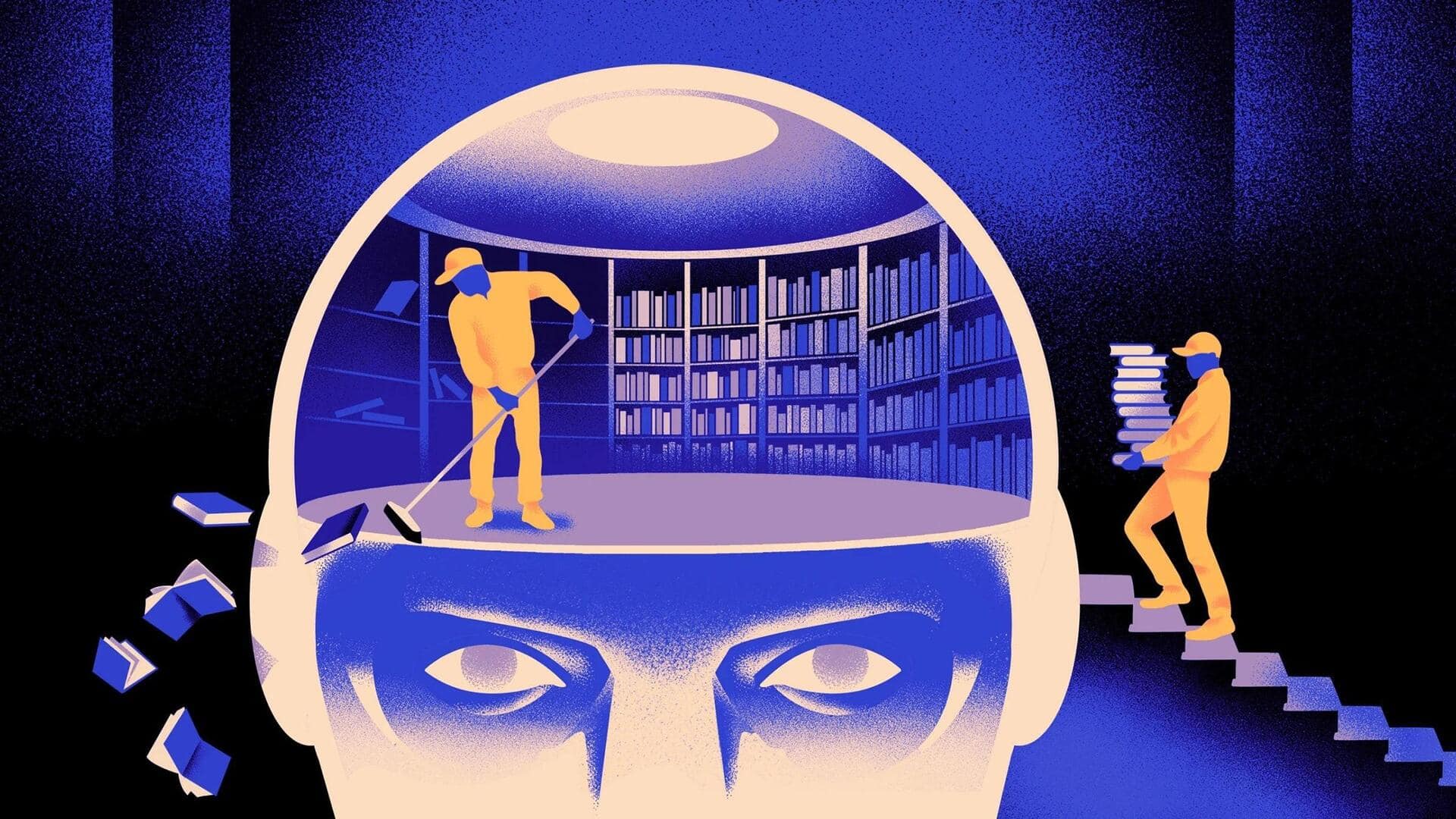

A recent study has revealed that the performance of generative AI models such as OpenAI's GPT-4o or Meta's Llama 3.1 405B can be substantially compromised by "unlearning" techniques. These methods are used to make AI models forget specific, undesirable information they acquired from training data, such as sensitive private data or copyrighted material. However, the study found that current unlearning methods often degrade models to the point where they become unusable.

Feasibility doubts

Researchers question the feasibility of unlearning methods

The study was conducted by researchers from the University of Washington (UW), Princeton, the University of Chicago, USC, and Google. Weijia Shi, a researcher on the study and a Ph.D. candidate in computer science at UW, stated, "Our evaluation suggests that currently feasible unlearning methods are not yet ready for meaningful usage or deployment in real-world scenarios." This statement underscores the challenges faced in implementing these techniques without compromising AI model performance.

AI functioning

Generative AI models and unlearning techniques explained

Generative AI models are statistical systems that predict words, images, speech, music, videos, and other data based on patterns in the training data they have ingested. These models do not possess real intelligence but make informed guesses based on the context of surrounding data. Unlearning techniques have gained attention recently due to copyright issues and privacy concerns associated with training data.
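To make "predicting words from patterns in training data" concrete, here is a deliberately tiny sketch: a bigram counter that guesses the next word from whichever word most often followed it in the training text. It is an illustration only. Production models are large neural networks, and the corpus and the function names `train_bigrams` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; the "model" is nothing more than these counts.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))
print(predict_next(model, "dog"))
```

The prediction is simply the statistically likeliest continuation, which is why whatever appears in the training data can resurface in the output.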

Method challenges

Unlearning methods: A potential solution with challenges

Unlearning could help remove sensitive information from existing models in response to a request or government order. However, current unlearning methods are not as simple as hitting "Delete." They rely on algorithms designed to steer models away from the data to be unlearned, which makes them harder to implement, and harder to verify, than a straightforward deletion.
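What "steering a model away" from data can look like is easiest to see on a toy. The sketch below assumes gradient ascent, one approach discussed in the research literature: instead of lowering the model's loss on the data to forget, the update raises it. The article does not say which algorithms the study tested, so the technique, the two-weight logistic model, and the `retain` and `forget` examples are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, x, y):
    """Negative log-likelihood of label y (0 or 1) under a logistic model."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
    p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def mean_loss(w, examples):
    return sum(loss(w, x, y) for x, y in examples) / len(examples)


def step(w, examples, lr):
    """One pass of per-example updates; lr > 0 learns, lr < 0 unlearns (ascent)."""
    for x, y in examples:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        w = [wi - lr * (p - y) * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical data: the second feature is shared by a retained and a forgotten example.
retain = [([1.0, 0.0], 1), ([1.0, 1.0], 1)]
forget = [([0.0, 1.0], 1)]

w = [0.0, 0.0]
for _ in range(50):  # ordinary training on everything
    w = step(w, retain + forget, lr=0.5)
before_forget, before_retain = mean_loss(w, forget), mean_loss(w, retain)

for _ in range(50):  # "unlearning": gradient ascent on the forget set only
    w = step(w, forget, lr=-0.5)
after_forget, after_retain = mean_loss(w, forget), mean_loss(w, retain)

print("forget loss:", before_forget, "->", after_forget)
print("retain loss:", before_retain, "->", after_retain)
```

Because the forgotten example shares a weight with one of the retained examples, pushing the model away from it also raises the loss on data the model was supposed to keep. That is a miniature version of the trade-off the study describes.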

Algorithm assessment

Evaluating the effectiveness of unlearning algorithms

To assess the effectiveness of these unlearning algorithms, Shi and her team created a benchmark called MUSE (Machine Unlearning Six-way Evaluation). The benchmark tests an algorithm's ability to prevent a model from regurgitating training data verbatim, and to erase both the model's knowledge of that information and any evidence that it was originally trained on the data. The researchers discovered that while unlearning algorithms did make models forget specific data, they also hurt the models' general question-answering capabilities.
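One of the properties described above, verbatim regurgitation, lends itself to a simple check: prompt the model with the start of a passage and measure how closely its continuation matches the real one. The sketch below scores that overlap with a longest-common-subsequence F1, in the spirit of ROUGE-L style metrics. It is not presented as MUSE's actual implementation, and the function names and sample strings are hypothetical.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def verbatim_overlap(generated, reference):
    """LCS-based F1 between two texts, split on whitespace (0.0 to 1.0)."""
    gen, ref = generated.split(), reference.split()
    if not gen or not ref:
        return 0.0
    lcs = lcs_length(gen, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(gen), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# Hypothetical strings standing in for a true continuation and two model outputs.
true_continuation = "it was the best of times it was the worst of times"
memorized_output = "it was the best of times it was the worst of times"
unrelated_output = "a storm rolled over hills that evening"

print(verbatim_overlap(memorized_output, true_continuation))
print(verbatim_overlap(unrelated_output, true_continuation))
```

A score near 1 means the model is reproducing the passage almost word for word, and a successful unlearning run should push it toward 0. A low score alone does not show that the underlying knowledge is gone, which is why the benchmark tests that separately.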

Unlearning intricacy

The complexity of unlearning in AI models

"Designing effective unlearning methods for models is challenging because knowledge is intricately entangled in the model," Shi explained. The study concluded that there are currently no efficient methods to make a model forget specific data without a significant loss of utility. This finding underscores the necessity for further research in this field. It indicates that AI firms seeking to use unlearning to address their training data issues must explore alternative methods to prevent their models from producing undesirable outputs.