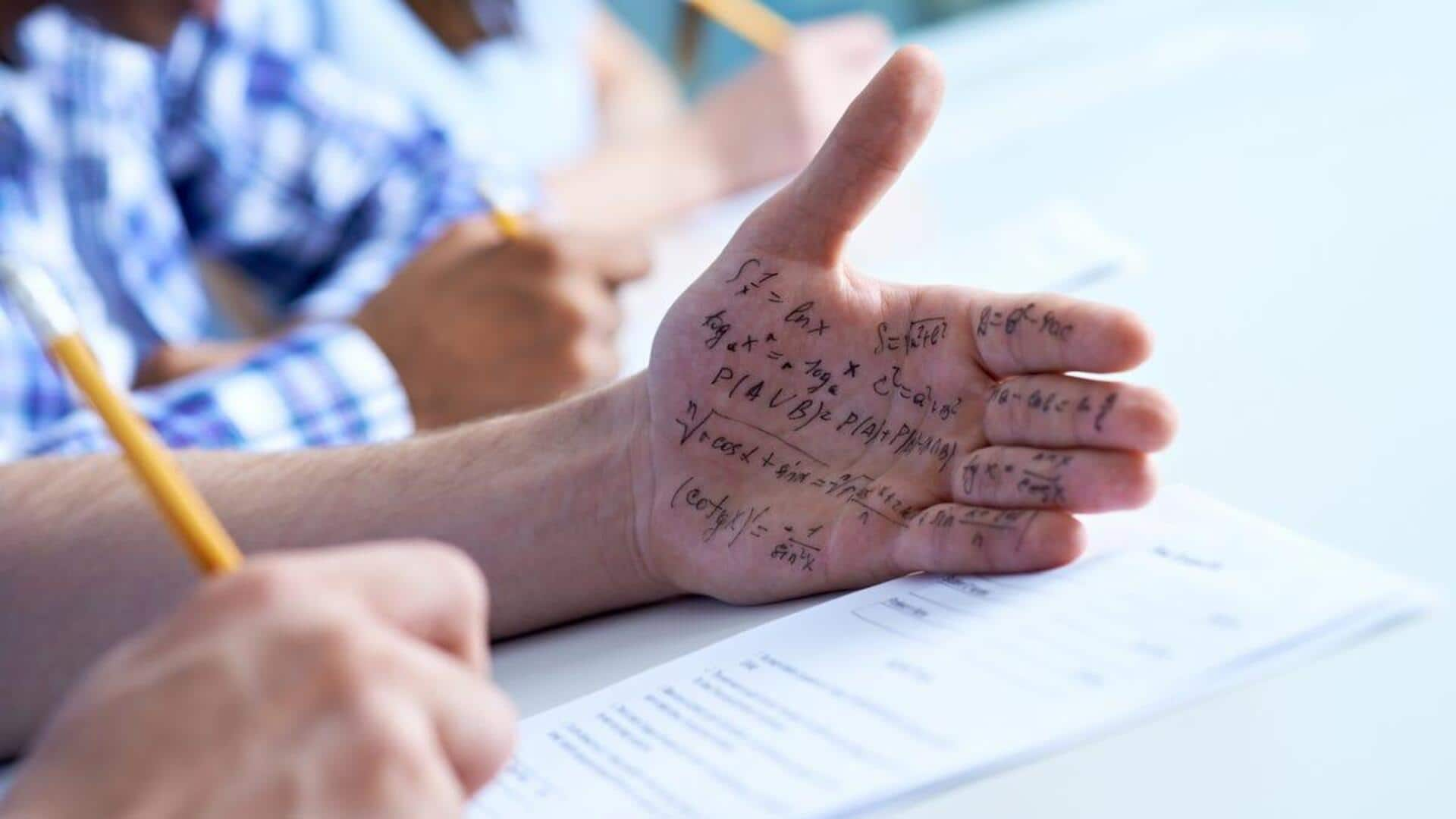

Is AI making it easier for students to cheat?

What's the story

A study by publishing firm Wiley has revealed growing concerns about the potential misuse of generative artificial intelligence (AI) in academic settings. Its survey covered more than 850 instructors and 2,000 students, a significant number of whom feared the technology could be used to cheat. About 68% of instructors and nearly half (47%) of the students surveyed believe that generative AI could negatively impact academic integrity.

Impact

AI tools like ChatGPT seen as enablers of academic dishonesty

The study further revealed that 35% of students identified ChatGPT, an AI tool developed by OpenAI, as a significant contributor to the perceived increase in academic dishonesty. This echoes concerns academics raised when the tool launched in November 2022. An Inside Higher Ed survey conducted earlier this year found that nearly half of university heads were worried about generative AI's threat to academic integrity.

Usage trends

Evolving perceptions in academia

Initial apprehension led many institutions to ban such tools, but restrictions have since been relaxed as attitudes toward the technology evolved. Even so, a majority of instructors still believe that AI could negatively affect academic integrity within the next three years. The Wiley survey also showed that student use of generative AI far outpaced faculty use, with 45% of students using AI in their classes compared with just 15% of instructors.

Trust issues

Distrust and avoidance of AI tools among students

The survey revealed that 36% of students did not trust AI tools, while slightly more (37%) said they avoided using them for fear their instructor would suspect them of cheating. The top reason students gave for disliking generative AI was its potential to facilitate cheating (33%). In contrast, only 14% of faculty cited the potential for cheating as a reason for their dislike; the largest share (37%) said the technology harms critical thinking.

Countermeasures

Proposed strategies to maintain academic integrity

Lyssa Vanderbeek, Vice President of courseware at Wiley, suggested three strategies institutions could adopt to uphold academic integrity: incentivizing students to start their work early, randomizing exams so that answers are harder to find online, and providing tools that flag suspicious behavior such as copied content or submissions from overseas IP addresses. She emphasized that "there is still a lot to learn," framing these challenges as opportunities for generative AI to help instructors in ways not currently possible.