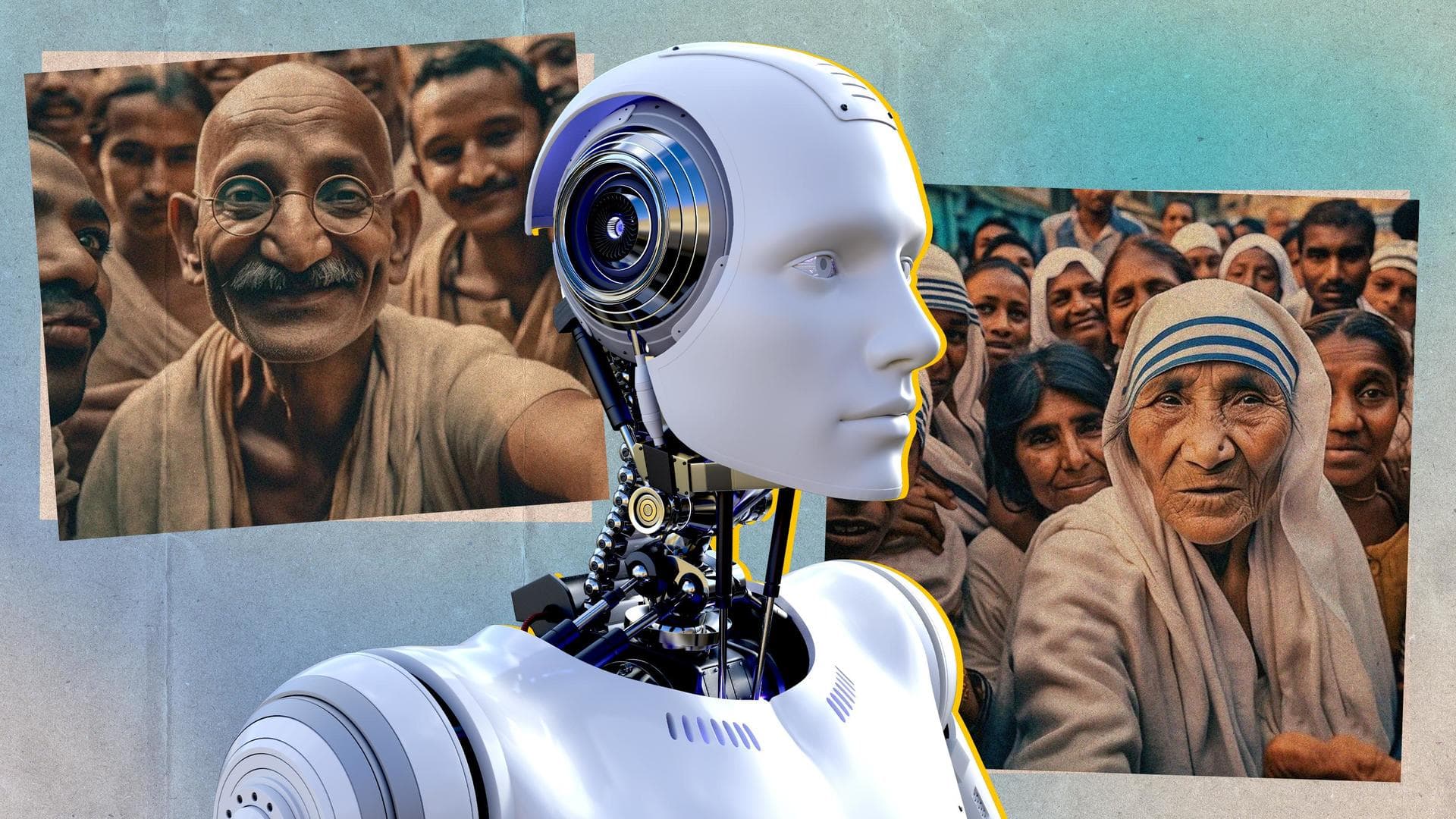

AI-generated images and the blurring lines between real and fake

What's the story

Hours after being spotted with Mary Barra, the CEO of General Motors, Elon Musk was seen holding hands with Alexandria Ocasio-Cortez.

However, Musk was with neither of them. The pictures were created by an AI program, but at least a few viewers may have mistaken them for real.

This raises the question: has AI blurred the line between real and fake?

Context

Why does this story matter?

The age of AI is upon us. We have been talking a lot about ChatGPT and its text-to-text alternatives, but what about text-to-image AIs?

OpenAI's DALL-E, Stable Diffusion, and Midjourney are all capable of creating hyperrealistic images from a simple text prompt.

The implications of such a technology are far-reaching, especially in an era where information spreads like wildfire.

Text-to-image

Text-to-image AI models are trained on large datasets

Like text-to-text technology, text-to-image AI involves training machine learning models to interpret natural-language text. Text-to-image models then translate that text into a corresponding visual representation.

These tools are trained on large datasets of text-image pairs, which teach the model to generate accurate, visually coherent images from textual descriptions.

Sense of reality

It has become difficult to identify fake from real

Text-to-image tools are constantly evolving, and they have made it increasingly difficult to tell what is real from what is fake.

A few days ago, photos of Donald Trump being arrested by New York City police flooded social media. Although the arrest never happened, the highly detailed images, combined with news that Trump was facing criminal charges, made the scene seem plausible.

Lack of context

Lack of context makes it even more difficult

In such a charged situation, plausibility alone is enough to create a sense of panic. The images of Trump's arrest spread rapidly.

Moreover, most people shared the images without any context, making it even harder for viewers to realize they were created by AI.

Without an explicit label, it has become increasingly hard to tell that certain images were generated by AI.

Issues

The cues that reveal fake images will gradually disappear

Certain telltale cues can still reveal whether an image was created by AI. To an untrained eye or a casual viewer, however, such images may appear real.

With AI technology evolving so rapidly, these cues will gradually disappear. Once they do, the already blurred line will vanish altogether, making real and fake nearly indistinguishable.

Twitter Post

Examine the fingers to tell whether an image is AI-generated

BREAKING: Hours after being seen with Mary Barra, Elon Musk was spotted with AOC pic.twitter.com/3jZfP7jZlD

— AllYourTech (@blovereviews) March 26, 2023